What is evaluation?

Evaluation is a systematic investigation to assess how well a project is meeting its goals.

Why should you evaluate your project?

Evaluation will:

- Monitor quality

- Gather evidence of impact

- Document results and findings

- Provide publishable data

Where do you start?

A project's success depends on clearly articulated, carefully crafted goals and objectives. Project goals should be SMART: Specific, Measurable, Attainable, Relevant, and Time-Based.

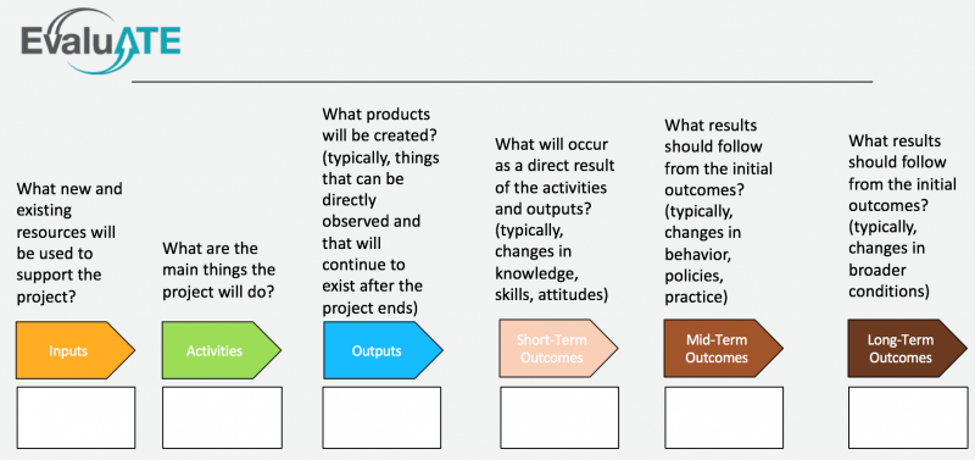

A logic model, such as the example below, is a visual representation that can help align your project activities with the project goals and identify ways to measure the outcomes.

Image credit: www.Evalu-ATE.org

How do you develop an evaluation plan?

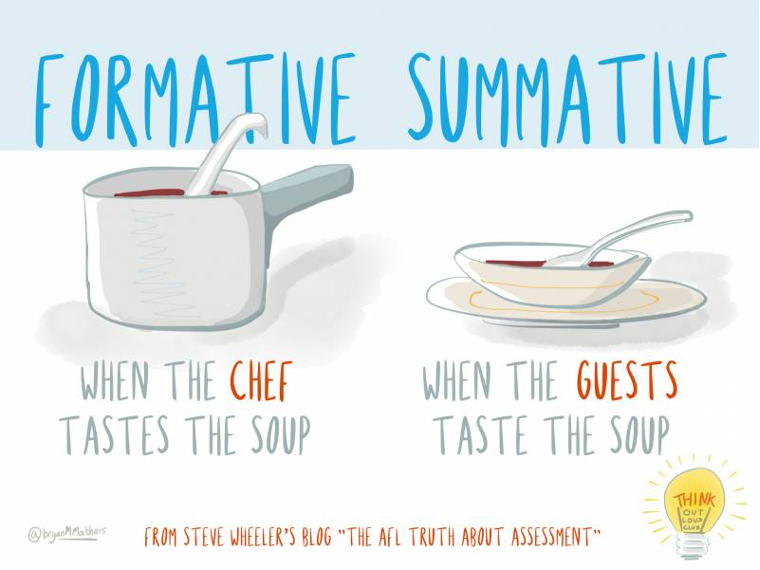

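An evaluation plan is guided by the project’s logic model, theory of change, or evaluation matrix. Evaluation can include both formative assessment, which provides ongoing feedback to improve the project while it is under way, and summative assessment, which judges the project's outcomes once it is complete.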

Examples of evaluation activities include:

- Surveys

- Interviews

- Focus groups

- Observations

- Pretest and posttest assessments

- Web analytics

- Tracking

What else should you know?

Evaluation often requires approval from the institutional review board (IRB) of the institution conducting the study. The IRB reviews all studies that involve human subjects. IRBs usually classify evaluation studies as exempt (or even as non-research), so the evaluation process requires only minimal oversight. The IRB ensures that data are de-identified before sharing, protects the rights and privacy of study participants, and holds the evaluation team to a high standard of data management and storage. If you are working with us at CIRES, our evaluation team will request IRB approval.